A.I. Eats Bias for Breakfast

3 October 2023

Human bias poses a real threat to effective decision-making, especially in HR, where another person's judgment can shape your career. Hiring managers have traditionally relied on their own judgment, which often leads to selection bias: favouring candidates similar to themselves over the most qualified ones. This unintentional bias stifles diversity and hampers progress. Algorithms, meanwhile, are often assumed to merely reproduce human bias. But what if A.I. could identify high-performing teams without bias?

Let’s examine such preconceptions and look at the results of research that Pera Labs conducted in collaboration with companies:

Algorithms are opinions embedded in code.

- Yes, this certainly also applies to algorithms used in the labour market. But opinions are not necessarily a bad thing. The opinions of a manager and colleagues about how someone does their work can be very valuable, particularly when you ask multiple people (raters) to compare colleagues’ behaviours at work and relate those behaviours to an objective outcome, for example the number of released innovations or revenue contribution. Opinions based on prejudice, first impressions, or unstructured interviews, however, are of little value and should not be used as input to train algorithms.

The idea that you can fix discrimination and inequality from above with inclusive algorithms is not correct.

- Inclusive algorithms can help combat discrimination and inequality. Diversity can be lost at every step of the recruitment process, from the wording of a vacancy text to the final decision on whether to hire someone, and at some of these steps algorithms can make a real difference.

- For example, some companies (pre)select candidates mainly on the basis of top universities and fields of study. An algorithm that also takes other factors into account, for example competencies and post-hire information, can (1) make a better pre-selection and (2) produce a pre-selection that is more diverse in terms of universities and fields of study. An inclusive algorithm can weigh many more factors at the same time than a human can, and therefore thinks much less in “boxes”.

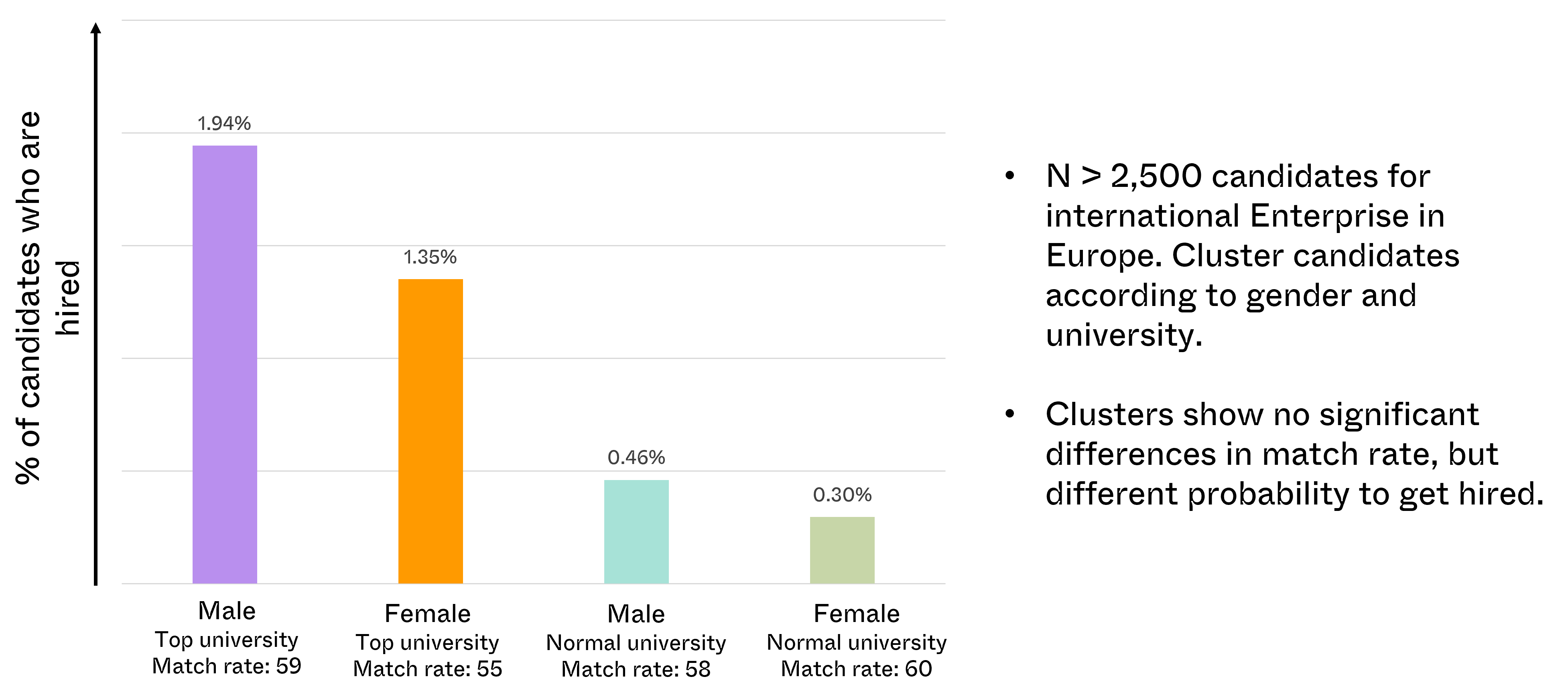

This research by Pera Labs at one company shows that good intentions alone cannot solve human bias. Female candidates without a top-university degree were 6.5 times less likely to be hired than male candidates with a top-university degree, even though both groups scored similarly on the competency assessment (match rates of 60 and 59).
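A figure such as “6.5 times less likely to be hired” is a ratio of two groups’ hire rates. The Python sketch below shows only that arithmetic. The counts are hypothetical and are not the Pera Labs data, `GroupOutcome` and `hire_rate_ratio` are illustrative names, and the sketch does not account for competency scores.

```python
from dataclasses import dataclass


@dataclass
class GroupOutcome:
    """Hiring outcome for one candidate group."""
    name: str
    applicants: int
    hired: int

    @property
    def hire_rate(self) -> float:
        # Share of applicants in this group who were hired, used as an
        # estimate of the probability of being hired.
        return self.hired / self.applicants


def hire_rate_ratio(reference: GroupOutcome, other: GroupOutcome) -> float:
    """How many times lower the hire probability of `other` is than `reference`."""
    if other.hired == 0:
        raise ValueError("ratio is undefined when the comparison group has no hires")
    return reference.hire_rate / other.hire_rate


# Hypothetical counts for illustration only; NOT the Pera Labs figures.
male_top_uni = GroupOutcome("male, top university", applicants=200, hired=40)
female_no_top_uni = GroupOutcome("female, no top university", applicants=250, hired=10)

if __name__ == "__main__":
    ratio = hire_rate_ratio(male_top_uni, female_no_top_uni)
    print(f"{female_no_top_uni.name}: {ratio:.1f}x lower probability of being hired")
```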

Unlike machines, people can continuously and flexibly adjust their biases through contact with one another.

- This perception is incorrect. Machines are far more flexible than humans: they can readily be retrained on millions of data points. By contrast, the number of meaningful interactions a person has outside their own bubble is usually very small, which is why biases persist.

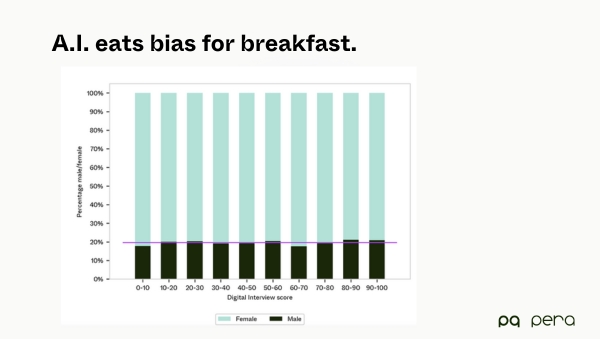

This research by Pera Labs shows that gender and university degree do not influence the ranking when candidates are ranked on their competencies.

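The idea of ranking candidates on competencies alone, then checking whether gender or university degree shifts the ranking, can be sketched in Python. This is a minimal sketch, assuming a single competency match score per candidate; the `Candidate` fields, function names and candidate data are hypothetical and do not describe Pera Labs’ method.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Candidate:
    name: str
    match_score: float     # competency match rate (hypothetical values below)
    gender: str            # kept only for the audit, never used for ranking
    top_university: bool   # kept only for the audit, never used for ranking


def rank_by_competency(candidates: list[Candidate]) -> list[Candidate]:
    """Rank candidates on competency match alone, highest score first."""
    return sorted(candidates, key=lambda c: c.match_score, reverse=True)


def mean_rank_by(ranked: list[Candidate], attribute: str) -> dict[object, float]:
    """Average position (1 = best) for each value of an audit attribute."""
    positions: dict[object, list[int]] = {}
    for position, candidate in enumerate(ranked, start=1):
        positions.setdefault(getattr(candidate, attribute), []).append(position)
    return {value: mean(ps) for value, ps in positions.items()}


# Hypothetical candidate pool for illustration only.
pool = [
    Candidate("A", 80, "female", False),
    Candidate("B", 78, "male", True),
    Candidate("C", 75, "female", True),
    Candidate("D", 73, "male", False),
]

if __name__ == "__main__":
    ranked = rank_by_competency(pool)
    print([c.name for c in ranked])
    print(mean_rank_by(ranked, "gender"))
    print(mean_rank_by(ranked, "top_university"))
```

Because the sort key reads only `match_score`, gender and university degree cannot change the order directly; the mean-rank audit then shows whether the scores themselves differ by group. With realistic pool sizes, a statistical test would be more reliable than raw averages.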